
America’s nuclear energy situation is a microcosm of the nation’s broader political dysfunction. We are at an impasse, and the debate around nuclear energy is highly polarized, even contemptuous. This political deadlock ensures that a widely disliked status quo carries on unabated. Depending on one’s politics, Americans are left either with outdated reactors and an unrealized potential for a high-energy but climate-friendly society, or are stuck taking care of ticking time bombs churning out another two thousand tons of unmanageable radioactive waste every year.

Continue reading at The New Atlantis

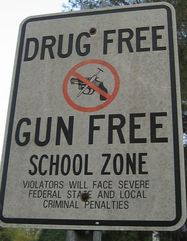

There has been no shortage of (mainly conservative) pundits and politicians suggesting that the path to fewer school shootings is armed teachers, and even armed custodians. Although it is entirely possible that such recommendations are not really serious but rather meant to distract from calls for stricter gun control legislation, it is still important to evaluate them. As someone who researches and teaches about the causes of unintended consequences, accidents, and disasters for a living, I find the idea that arming public school workers will make children safer highly suspect, but not for the reasons one might think.

If there is one commonality across myriad cases of political and technological mistakes, it is the failure to acknowledge complexity. Nuclear reactors designed for military submarines were scaled up by more than an order of magnitude to power civilian power plants without sufficient recognition of how that affected their safety. Large reactors can get so hot that containing a meltdown becomes impossible, forcing managers to be ever vigilant against the smallest errors and to install backup cooling systems, which only added further difficult-to-manage complexity. Designers of autopilot systems neglected to consider how automation eroded the abilities of airline pilots, leading to crashes when the technology malfunctioned and now-deskilled pilots were forced to take over. A narrow focus on applying simple technical solutions to complex problems generally leaves people caught unawares by the unanticipated outcomes that follow.

Debate about whether to put more guns in schools tends to emphasize the solution’s supposed efficacy. Given that even the “good guy with a gun” best positioned to stop the Parkland shooting failed to act, can we reasonably expect teachers to do much better? In light of the fact that mass shootings have occurred even at military bases, what reason do we have to believe that filling educational institutions with armed personnel will reduce the lethality of such incidents? As important as these questions are, they divert our attention from the new kinds of errors produced by applying a simplistic solution (more guns) to a complex problem.

A comparison with the history of nuclear armaments should give us pause. Although most Americans during the Cold War worried about potential atomic war with the Soviets, Cubans, or Chinese, much of the real risk associated with nuclear weapons involved accidental detonation.
While many believed during the Cuban Missile Crisis that total annihilation would come from nationalistic posturing and brinkmanship, it was actually ordinary incompetence that brought us closest. Strategic Air Command’s insistence on maintaining U-2 and B-52 flights and intercontinental ballistic missile tests during a period of heightened tension risked a military response from the Soviet Union: pilots sometimes got lost and approached Soviet airspace, and missile tests could have been misinterpreted as hostile. Malfunctioning computer chips made NORAD’s screens light up with incoming Soviet missiles, leading the US to prep and launch nuclear-armed jets. Nuclear weapons stored at NATO sites in Turkey and elsewhere were sometimes guarded by a single American soldier. Nuclear-armed B-52s crashed or accidentally released their payloads, with some coming dangerously close to detonation.

Much the same would be true for the arming of school workers: the likelihood of routine human error would put children at risk. Millions of potentially armed teachers and custodians translate into an equal number of opportunities for a troubled student to steal weapons that would otherwise be difficult to acquire. Some employees are likely to be as incompetent as Michelle Ferguson-Montgomery, a teacher who shot herself in the leg at her Utah school, though others may not be so lucky as to avoid hitting a child. False alarms will result not simply in lockdowns but in armed adults roaming the halls and, as a result, the possibility of children being killed for holding cellphones or other objects that can be mistaken for weapons. Even “good guys” with guns miss the target at least some of the time.

The most tragic unintended consequence, however, would be how arming employees would alter school life and the personalities of students. Generations of Americans suffered psychologically under Cold War fears of nuclear war.
Given the unfortunate ways that many from those generations now think in their old age (being prone to hyper-partisanship, hawkish in foreign affairs, and excessively fearful of immigrants), one worries that a generation of kids brought up in quasi-militarized schools could be rendered incapable of thinking sensibly about public issues, especially when it comes to national security and crime.

This last consequence is probably the most important one. Even though more attention ought to be paid to the accidental loss of life likely to be caused by arming school employees, it is far too easy to quibble endlessly about the magnitude and likelihood of those risks. That debate is easily scientized and thus dominated by a panoply of experts, each claiming to provide an “objective” assessment of whether the potential benefits outweigh the risks. The pathway out of the morass lies in focusing on values: on how arming teachers, and even “lockdown” drills, fundamentally disrupt the qualities of childhood that we hold dear. Transforming schools into places defined by a constant fear of senseless violence means they cannot feel as warm, inviting, and communal as they otherwise could. We should be skeptical of any policy that promises greater security only at the cost of the more intangible features of life that make it worth living.

10/6/2017

Why the Way We Talk About Politics Will Ensure that Mass Shootings Keep Happening

After news broke of the Las Vegas shooting, which claimed some 59 lives, professional and lay observers did not hesitate to trot out the same rhetoric that Americans have heard time and time again. Those horrified by the events demanded that something be done; indeed, the frequency and scale of these events should be horrifying. Conservatives, in response, emphasized evidence for what they see as the futility of gun control legislation. Yet it is not so much gun control itself that seems futile but rather our collective efforts to accomplish almost any policy change. The Onion satirized America's firearm predicament with the same headline used after numerous other shootings: “‘No Way to Prevent This,’ Says Only Nation Where This Regularly Happens.” Why is it that we Americans seem so helpless to effect change with regard to mass shootings? What explains our inability to act collectively to combat these events?

Political change is, almost invariably, slow and incremental. Although the American political system is, by design, uniquely conservative and biased toward maintaining the status quo, that is not the only reason why rapid change rarely occurs. Democratic politics is often characterized as being composed of a variety of partisan political groups, all vying with one another to get their preferred outcome in any given policy area: that is, as pluralistic. When these different partisan groups are relatively equal and numerous, change is likely to be incremental because of substantial disagreements between these groups and the fact that each has only a partial hold on power. Relative equality among them means that any policy must be a product of compromise and concession; consensus is rarely possible. Advocates of environmental protection, for instance, could not expect to convince governments to immediately dismantle coal-fired power plants, though they might be able to get taxes, fines, or subsidies adjusted to discourage them; the opposition of industry would prevent radical change. Ideally, the disagreements and mutual adjustments between partisans would lead to a more intelligent outcome than if, say, a benevolent dictator decided unilaterally.

While incremental policy change would be expected even in an ideal world of relatively equal partisan groups, things can move even more slowly when one or more partisan groups are disproportionately powerful. This helps explain why gun control policy (and, indeed, environmental protection and a whole host of other potentially promising changes) more often stagnates than advances. Businesses occupy a relatively privileged position compared to other groups. While the CEO of Exxon can expect the president’s ear whenever a new energy bill is being passed, average citizens, and even heads of large environmental groups, rarely get the same treatment. In short, when business talks, governments listen.
Unsurprisingly, the voice of the NRA, which is in essence a lobby group for the firearm industry, sounds much louder to politicians than anyone else’s, something made clear by how insensitive congressional activity is to widespread support for strengthening gun control policy. But there is more to it than just that. I am not the first person to point out that the strength of the gun lobby stymies change. By focusing too narrowly on the disproportionate power wielded by some in the gun violence debate, we miss the more subtle ways in which democratic political pluralism is itself in decline.

Another contributing factor to the slowness of gun policy change is the way Americans talk about issues like gun violence. Most news stories, op-eds, and tweets are laced with references to studies and a plethora of national and international statistics. Those arguing about what should be done about gun violence act as if the main barrier to change has been that not enough people have been informed of the right facts. Worse, most participants seem already totally convinced of the rightness of their own version or interpretation of those facts: e.g., that employing post-Port Arthur Australian policy in the US will reduce deaths, or that restrictive gun laws will lead to rises in urban homicides. Like two warring nations each believing that they have God uniquely on their side, both sides of the gun control debate lay claim to being on the right side of the facts, if not of rationality writ large. The problem with such framings (besides the fact that no one actually knows what the outcome would be until a policy is tried out) is that they imply anyone who disagrees must be ignorant, an idiot, or both. That is, exclusively fact-based rhetoric, the scientizing of politics, denies pluralism. Any disagreement is painted as illegitimate, if not heretical.
Such a view leads to a fanatical form of politics: there is the side with “the facts” and the side that only needs to be informed or defeated, not listened to. If “the facts” have already predetermined the outcome of policy change, then there is no rational reason for compromise or concession; doing so would simply harm one’s own position (and entertain nonsense). If gun control policy is to proceed more pluralistically, then rhetorical appeals to the facts would seem to need to be dispensed with, or at least modified. Given that the uncompromising fanaticism of some of those involved seems rooted in an unwavering certainty regarding the relevant facts, emphasizing uncertainty would likely be a promising avenue. In fact, psychological studies find that asking people to face the complexity of public issues and recognize the limits of their own knowledge leads to less fanatical political positions.

Proceeding with a conscious acknowledgement of uncertainty would have the additional benefit of encouraging smarter policy. Guided by an overinflated trust that a few limited studies can predict outcomes in exceedingly complex and unpredictable social systems, policy makers tend to institute rule changes or laws with no explicit role for learning. Even though scientific theories are only tentatively true, ready to be overturned by ever more discerning experimental tests or shifts in paradigm, participants in the debate act as if events in Australia or Chicago have established eternal truths about gun control. As a result, it is seldom considered that new policies could be tested gradually, through background check and registration requirements that become more stringent over time or through regional rollouts, with an explicit emphasis on monitoring for effectiveness and unintended consequences, especially consequences for the already marginalized.
How Americans debate issues like gun control would be improved in still other ways if the narrative of “the facts” were not so dominant in people’s speech. It would allow greater consideration of values, feelings, and experiences. For instance, gun rights advocates are right to note that semiautomatic “assault” weapons are responsible for a minority of gun deaths, but their narrow focus on that statistical fact prevents them from recognizing that it is not the weapons’ “objective” danger that motivates their opponents but their political riskiness. The assault rifle, due to its use in horrific mass shootings, has come to symbolize American gun violence writ large. For gun control advocates it is the antithesis of conservatives’ iconography of the flag: it represents everything that is rotten about American culture. No doubt reframing the debate in that way would not guarantee more productive deliberation, but it would at least give political opponents some means of beginning to understand each other’s positions.

Even if I am at least partly correct in diagnosing what ails American political discourse, there remains the pesky problem of how to treat it. Allusions to “the facts,” attempts to leverage rhetorical appeals to science for political advantage, have come to dominate political discourse over the course of decades, without anyone consciously intending or dictating it. How can movement in the opposite direction be effected? Unfortunately, while some social scientists study these kinds of cultural shifts as they have occurred throughout history, practically none of them research how beneficial cultural changes could be generated in the present. Hence, perhaps the first change citizens could advocate for would be more publicly responsive and relevant social research.
Faced with an increasingly pathological political process and ever more dire consequences from epochal problems, social scientists can no longer afford to be so aloof; they cannot afford to simply observe and analyze society while real harms and injustices continue unabated.

Although Elon Musk's recent cryptic tweets about getting approval to build a Hyperloop system connecting New York and Washington DC are likely to be well received among techno-enthusiasts--many of whom see him as Tony Stark incarnate--there are plenty of reasons to remain skeptical. Musk, of course, has never shied away from proposing and implementing what would otherwise seem to be fairly outlandish technical projects; however, the success of large-scale technological projects depends on more than just getting the engineering right. Given that Musk has provided few signs that he considers the sociopolitical side of his technological undertakings with the same care that he gives the technical aspects (just look at the naivete of his plans for governing a Mars colony), his Hyperloop project is most likely going to be a boondoggle--unless he is very, very lucky.

Don't misunderstand my intentions, dear reader. I wish Mr. Musk all the best. If climate scientists are correct, technological societies ought to be doing everything they can to get citizens out of their cars, out of airplanes, and into trains. Generally, I am in favor of any project that gets us one step closer to that goal. However, expensive failures would hurt the legitimacy of alternative transportation projects, in addition to sucking up capital that could be used on projects that are more likely to succeed.

So what leads me to believe that the Hyperloop, as currently envisioned, is probably destined for trouble? Musk's proposals, as well as the arguments of many of his cheerleaders, are marked by an extreme degree of faith in the power of engineering calculation. This faith flies in the face of much of the history of technological change, which has primarily been an incremental, trial-and-error affair, often resulting in more failures than success stories. The complexity of reality and of contemporary technologies dwarfs people's ability to model and predict. Hyman Rickover, the officer in charge of developing the Navy's first nuclear submarine, described at length the significant differences between "paper reactors" and "real reactors," namely that the latter are usually behind schedule, hugely expensive, and surprisingly complicated by what would normally be trivial issues. In fact, part of the reason the early nuclear energy industry was such a failure, in terms of safety oversights and being hugely over budget, was that decisions were dominated by enthusiasts who scaled the technology up too rapidly, building plants six times larger than any that then existed before having gained sufficient expertise with the technology. Musk has yet to build a full-scale Hyperloop, leaving unanswered questions as to whether he can satisfactorily deal with the complications inherent in shooting people down a low-pressure tube at 800 miles an hour.
All publicly available information suggests he has only constructed a one-mile mock-up on his company's property. Although this is one step beyond a "paper" Hyperloop, a NY to DC line would be approximately 250 times longer. Given that unexpected phenomena emerge with increasing scale, Musk would be prudent to start smaller. Doing so would mean learning from the failed efforts of the US and Germany to develop wind power in the 1980s. They tried to build the most technically advanced turbines possible, drawing on recent aeronautical innovations. Yet their efforts resulted in gargantuan turbines that often failed within tens of operating hours. The Danes, in contrast, started with conventional designs, scaling them up incrementally and learning from experience.

Apart from the scaling-up problem, Musk's project relies on simultaneously making unprecedented advances in tunneling technology. The Boring Company's website touts its vision of a ten-fold decrease in cost through potential technical improvements: increasing boring machine power, shrinking tunnel diameters, and (more dubiously) automating the tunneling process. As a student of technological failure, I would question the wisdom of throwing complex and largely experimental boring technology into a project that is already a large, complicated endeavor with which Musk and his employees have too little experience. A prudent approach would entail spending considerable time testing these new machines on smaller projects with far less financial risk before jumping headfirst into a Hyperloop project. Indeed, the failure of the US space shuttle can be partly attributed to the desire to innovate in too many areas at the same time. Moreover, Musk's proposals seem woefully uninformed about the complications that arise in tunnel construction, many of which can sink a project.
No matter how sophisticated or well engineered the technology involved, the success of large-scale sociotechnical projects is incredibly sensitive to unanticipated errors. This is because such projects are highly capital intensive and inflexibly designed. As a result, mistakes increase costs and, in turn, production pressures, which then contribute to future errors. The project to build a 2-mile tunnel to replace the Alaskan Way Viaduct, for instance, incurred a two-year, quarter-billion-dollar delay after the boring machine was damaged by striking a pipe casing that had gone unnoticed in the survey process. Unless taxpayers are forced to pony up for those costs, you can be sure that tunnel tolls will be higher than predicted. It is difficult to imagine how many hiccups could stymie construction on a 250-mile Hyperloop. Such errors will invariably raise the capital costs of the project, costs that would need to be recouped through operating revenues. Given the competition from other trains, driving, and flying, fares that are too high could turn the Hyperloop into a luxury transport system for the elite. Concorde, anyone?

Again, while I applaud Musk's ambition, I worry that he is not proceeding intelligently enough. Intelligently developing something like a Hyperloop system would entail focusing more on his own and his organization's ignorance, avoiding the tendency to become overly enamored with one's own technical acumen. Doing so would also entail not committing too early to a certain technical outcome, but instead designing so as to maximize opportunities for learning and to ensure that mistakes are relatively inexpensive to correct. Such an approach is rarely compatible with grand visions of immediate technical progress, at least in the short term. Unfortunately, many of us, especially Silicon Valley venture capitalists, are too in love with those grand visions to make the right demands of technologists like Musk.

The stock phrase that “those who do not learn history are doomed to repeat it” certainly seems to hold true for technological innovation. After a team of Stanford University researchers recently developed an algorithm that they say is better at diagnosing heart arrhythmias than a human expert, all the MIT Technology Review could muster was to rhetorically ask if patients and doctors could ever put their trust in an algorithm. I won’t dispute the potential for machine learning algorithms to improve diagnoses; however, I think we should all take issue when journalists like Will Knight depict these technologies so uncritically, as if their claimed merits will be unproblematically realized without negative consequences.

Indeed, the same gee-whiz reporting likely accompanied the advent of computerized autopilot in the 1970s, probably with the same lame rhetorical question: “Will passengers ever trust a computer to land a plane?” Of course, we now know that the implementation of autopilot was anything but a simple story of improved safety and performance. As both Robert Pool and Nicholas Carr have demonstrated, the automation of facets of piloting created new forms of accidents produced by unanticipated problems with sensors and electronics, as well as the eventual deskilling of human pilots. That shallow, ignorant reporting on similar automation technologies, including not just automated diagnosis but also technologies like driverless cars, continues despite the knowledge of those previous mistakes is truly disheartening.

That the tendency to avoid digging too deeply into the potential undesirable consequences of automation technologies is so widespread is telling. It suggests that something must be acting as a barrier to people’s ability to think clearly about such technologies. The political scientist Charles Lindblom called these barriers “impairments to critical probing,” noting the role of schools and the media in helping to ensure that most citizens refrain from critically examining the status quo. Such impairments to critical probing with respect to automation technologies are visible in the myriad simplistic narratives that are often presumed rather than demonstrated, such as the belief that algorithms are inherently safer than human operators. Indeed, one comment on Will Knight’s article prophesied that “in the far future human doctors will be viewed as dangerous compared to AI.” Not only are such predictions impossible to justify (at this point they cannot be anything more than wildly speculative conjectures), but they fundamentally misunderstand what technology is.
Too often people act as if technologies were autonomous forces in the world, not only in the sense that they treat technological changes as foreordained and unstoppable but also in how they fail to see that no technology functions without the involvement of human hands. Indeed, technologies are better thought of as sociotechnical systems. Even a simple tool like a hammer cannot exist without underlying human organizations, which provide the conditions for its production, nor can it act in the world unless it has been designed to be compatible with the shape and capacities of the human body. A hammer that is too big to be effectively wielded by a person would be correctly recognized as an ill-conceived technology; few would fault a manual laborer forced to use such a hammer for any undesirable outcomes of its use. Yet somehow most people fail to extend the same recognition to more complex undertakings like flying a plane or managing a nuclear reactor: in such cases, the fault is regularly attributed to “human error.” How could it be fair to blame a pilot, who becomes deskilled only because the job requires him or her to rely almost exclusively on autopilot, for mistakenly pulling up on the controls and stalling the plane during an unexpected autopilot error? The tendency to do so is a result of not recognizing autopilot technology as a sociotechnical system. Autopilot technology that leads to deskilled pilots, and hence accidents, is as poorly designed as a hammer too large for the human body: it fails to respect the complexities of the human-technology interface.

Many people, including many of my students, find that chain of reasoning difficult to accept, even though they struggle to locate any fault with it. They labor under the weight of the impairing narrative that leads them to assume that the replacement of human action with computerized algorithms is always unalloyed progress.
My students’ discomfort is only further provoked when they are presented with evidence that early automated textile technologies produced substandard, shoddy products and were most likely implemented in order to undermine organized labor rather than to contribute to a broader, more humanistic notion of progress. In any case, the continued power of the automation-equals-progress narrative will likely stifle the development of intelligent debate about automated diagnosis technologies.

If technological societies currently poised to begin automating medical care are to avoid repeating history, they will need to learn from past mistakes. In particular, how could AI be implemented so as to enhance the diagnostic ability of doctors rather than deskill them? Such an approach would part ways with traditional ideas about how computers should influence the work process, aiming to empower and “informate” skilled workers rather than replace them. As Siddhartha Mukherjee has noted, while algorithms can be very good at partitioning (e.g., distinguishing minute differences between pieces of information), they cannot deduce “why,” they cannot build a case for a diagnosis by themselves, and they cannot be curious. Replacing humans with algorithms comes at the cost of these qualities. Citizens of technological societies should demand that AI diagnostic systems be used to aid the ongoing learning of doctors, helping them to solidify hunches and not overlook possible alternative diagnoses or pieces of evidence. Meeting such demands, however, may require that still other impairing narratives be challenged, particularly the belief that societies must acquiesce to the “disruptions” of new innovations as they are imagined and desired by Silicon Valley elites, or the tendency to think of the qualities of the work process last, if at all, amid all the excitement over extending the reach of robotics.

Author

Taylor C. Dotson is an associate professor at New Mexico Tech, a Science and Technology Studies scholar, and a research consultant with WHOA. He is the author of The Divide: How Fanatical Certitude is Destroying Democracy and Technically Together: Reconstructing Community in a Networked World. Here he posts his thoughts on issues mostly tangential to his current research.