The stock phrase that “those who do not learn history are doomed to repeat it” certainly seems to hold true for technological innovation. After a team of Stanford University researchers recently developed an algorithm that they say is better at diagnosing heart arrhythmias than a human expert, all the MIT Technology Review could muster was to rhetorically ask if patients and doctors could ever put their trust in an algorithm. I won’t dispute the potential for machine learning algorithms to improve diagnoses; however, I think we should all take issue when journalists like Will Knight depict these technologies so uncritically, as if their claimed merits will be unproblematically realized without negative consequences.

Indeed, the same gee-whiz reporting likely happened during the advent of computerized autopilot in the 1970s, probably with the same lame rhetorical question: “Will passengers ever trust a computer to land a plane?” Of course, we now know that the implementation of autopilot was anything but a simple story of improved safety and performance. As both Robert Pool and Nicholas Carr have demonstrated, the automation of facets of piloting created new forms of accidents produced by unanticipated problems with sensors and electronics as well as the eventual deskilling of human pilots. That shallow, ignorant reporting for similar automation technologies, including not just automated diagnosis but also technologies like driverless cars, continues despite the knowledge of those previous mistakes is truly disheartening. The fact that the tendency to not dig too deeply into the potential undesirable consequences of automation technologies is so widespread is telling. It suggests that something must be acting as a barrier to people’s ability to think clearly about such technologies. The political scientist Charles Lindblom called these barriers “impairments to critical probing,” noting the role of schools and the media in helping to ensure that most citizens refrain from critically examining the status quo. Such impairments to critical probing with respect to automation technologies are visible in the myriad simplistic narratives that are often presumed rather than demonstrated, such as the belief that algorithms are inherently safer than human operators. Indeed, one comment on Will Knight’s article prophesied that “in the far future human doctors will be viewed as dangerous compared to AI.” Not only are such predictions impossible to justify (at this point they cannot be anything more than wildly speculative conjectures), but they fundamentally misunderstand what technology is. 
Too often people act as if technologies were autonomous forces in the world, not only in the sense that people act as if technological changes were foreordained and unstoppable but also in how they fail to see that no technology functions without the involvement of human hands. Indeed, technologies are better thought of as sociotechnical systems. Even a simple tool like a hammer cannot exist without underlying human organizations, which provide the conditions for its production, nor can it act in the world without having been designed to be compatible with the shape and capacities of the human body. A hammer that is too big to be effectively wielded by a person would be correctly recognized as an ill-conceived technology; few would fault a manual laborer forced to use such a hammer for any undesirable outcomes of its use. Yet somehow most people fail to extend the same recognition to more complex undertakings like flying a plane or managing a nuclear reactor: in such cases, the fault is regularly attributed to “human error.” How could it be fair to blame a pilot, who only becomes deskilled as a result of a job that requires them to rely almost exclusively on autopilot, for mistakenly pulling up on the controls and stalling the plane during an unexpected autopilot error? The tendency to do so is a result of not recognizing autopilot technology as a sociotechnical system. Autopilot technology that leads to deskilled pilots, and hence accidents, is as poorly designed as a hammer incompatibly large for the human body: it fails to respect the complexities of the human-technology interface. Many people, including many of my students, find that chain of reasoning difficult to accept, even though they struggle to locate any fault with it. They struggle under the weight of the impairing narrative that leads them to assume that the substitution of human action with computerized algorithms is always unalloyed progress. 
My students’ discomfort is only further provoked when presented with evidence that early automated textile technologies produced substandard, shoddy products, most likely being implemented in order to undermine organized labor rather than to contribute to a broader, more humanistic notion of progress. In any case, the continued power of the automation=progress narrative will likely stifle the development of intelligent debate about automated diagnosis technologies. If technological societies currently poised to begin automating medical care are to avoid repeating history, they will need to learn from past mistakes. In particular, how could AI be implemented so as to enhance the diagnostic ability of doctors rather than deskill them? Such an approach would part ways with traditional ideas about how computers should influence the work process, aiming to empower and “informate” skilled workers rather than replace them. As Siddhartha Mukherjee has noted, while algorithms can be very good at partitioning, e.g., distinguishing minute differences between pieces of information, they cannot deduce “why,” they cannot build a case for a diagnosis by themselves, and they cannot be curious. We only replace humans with algorithms at the cost of these qualities. Citizens of technological societies should demand that AI diagnostic systems be used to aid the ongoing learning of doctors, helping them to solidify hunches and not overlook possible alternative diagnoses or pieces of evidence. Meeting such demands, however, may require that still other impairing narratives be challenged, particularly the belief that societies must acquiesce to the “disruptions” of new innovations, as they are imagined and desired by Silicon Valley elites, or the tendency to think of the qualities of the work process last, if at all, in all the excitement over extending the reach of robotics.

Few issues stoke as much controversy, or provoke as shallow an analysis, as net neutrality. 
Richard Bennett’s recent piece in the MIT Technology Review is no exception. His views represent a swelling ideological tide among certain technologists that threatens not only any possibility for democratically controlling technological change but any prospect for intelligently and preemptively managing technological risks. The only thing he gets right is that “the web is not neutral” and never has been. Yet current “net neutrality” advocates avoid seriously engaging with that proposition. What explains the self-stultifying allegiance to the notion that the Internet could ever be neutral?

Bennett claims that net neutrality has no clear definition (it does), that anything good about the current Internet has nothing to do with a regulatory history of commitment to net neutrality (something he can’t prove), and that the whole debate only exists because “law professors, public interest advocates, journalists, bloggers, and the general public [know too little] about how the Internet works.” To anyone familiar with the history of technological mistakes, the underlying presumption that we’d be better off if we just let the technical experts make the “right” decision for us—as if their technical expertise allowed them to see the world without any political bias—should be a familiar, albeit frustrating, refrain. In it one hears the echoes of early nuclear energy advocates, whose hubris led them to predict that humanity wouldn’t suffer a meltdown in hundreds of years, whose ideological commitment to an atomic vision of progress led them to pursue harebrained ideas like nuclear jets and using nuclear weapons to dig canals. One hears the echoes of those who managed America’s nuclear arsenal and tried to shake off public oversight, bringing us to the brink of nuclear oblivion on more than one occasion. Only armed with such a poor knowledge of technological history could someone make the argument that “the genuine problems the Internet faces today…cannot be resolved by open Internet regulation. Internet engineers need the freedom to tinker.” Bennett’s argument is really just an ideological opposition to regulation per se, a view based on the premise that innovation better benefits humanity if it is done without the “permission” of those potentially negatively affected. Even though Bennett presents himself as simply a technologist whose knowledge of the cold, hard facts of the Internet leads him to his conclusions, he is really just parroting the latest discursive instantiation of technological libertarianism. 
As I’ve recently argued, the idea of “permissionless innovation” is built on a misunderstanding (intentional?) of the research on how to intelligently manage technological risks as well as the problematic assumption that innovations, no matter how disruptive, have always worked out for the best for everyone. Unsurprisingly, the people most often championing the view are usually affluent white guys who love their gadgets. It is easy to have such a rosy view of the history of technological change when one is, and has consistently been, on the winning side. It is a view that is only sustainable as long as one never bothers to inquire into whether technological change has been an unmitigated wonder for the poor white and Hispanic farmhands who now die at relatively younger ages of otherwise rare cancers, the Africans who have mined and continue to mine uranium or coltan in despicable conditions, or the permanent underclass created by continuous technological upheavals in the workplace not paired with adequate social programs. In any case, I agree with Bennett’s argument in a later comment to the article: “the web is not neutral, has never been neutral, and wouldn't be any good if it were neutral.” Although advocates for net neutrality are obviously demanding a very specific kind of neutrality, namely that ISPs do not treat packets differently based on where they originate or where they’re going, the idea of net neutrality has taken on a much broader symbolic meaning, one that I think constrains people’s thinking about Internet freedoms rather than enhances it. The idea of neutrality carries so much rhetorical weight in Western societies because their cultures are steeped in a tradition of philosophical liberalism. Liberalism is a philosophical tradition based in the belief that the freedom of individuals to choose is the greatest good. 
Even American political conservatives really just embrace a particular flavor of philosophical liberalism, one that privileges the freedoms enjoyed by supposedly individualized actors unencumbered by social conventions or government interference to make market decisions. Politics in nations like the US proceeds with the assumption that society, or at least parts of it, can be composed in such a way as to allow individuals to decide wholly for themselves. Hence, it is unsurprising that changes in Internet regulations provoke so much ire: The Internet appears to offer that neutral space, both in terms of the forms of individual self-expression valued by left-liberals and the purportedly disruptive market environment that gives Steve Jobs wannabes wet dreams. Neutrality is, however, impossible. As I argue in my recent book, even an idealized liberal society would have to put constraints on choice: People would have to be prevented from making their relationship or communal commitments too strong. As loath as some leftists would be to hear it, a society that maximizes citizens’ abilities for individual self-expression would have to be even more extreme than Margaret Thatcher imagined it: composed of atomized individuals. Even the maintenance of family structures would have to be limited in an idealized liberal world. On a practical level it is easy to see the cultivation of a liberal personhood in children as imposed rather than freely chosen, with one Toronto family going so far as to not assign their child a gender. On the plus side for freedom, the child now has a new choice they didn’t have before. On the negative side, they didn’t get to choose whether or not they’d be forced to make that choice. All freedoms come with obligations, and often some people get to enjoy the freedoms while others must shoulder the obligations. So it is with the Internet as well. 
Currently ISPs are obliged to treat packets equally so that content providers like Google and Netflix can enjoy enormous freedoms in connecting with customers. That is clearly not a neutral arrangement, even though it is one that many people (including Google) prefer. However, the more important non-neutrality of the Internet, one that I think should take center stage in debates, is that it is dominated by corporate interests. Content providers are no more accountable to the public than large Internet service providers. At least since it was privatized in the mid-90s, the Internet has been biased toward fulfilling the needs of business. Other aspirations like improving democracy or cultivating communities, if the Internet has even really delivered all that much in those regards, have been incidental. Facebook wants you to connect with childhood friends so it can show you an ad for a 90s nostalgia t-shirt design. Google wants to make sure neo-nazis can find the Stormfront website so they can advertise the right survival gear to them. I don’t want a neutral net. I want one biased toward supporting well-functioning democracies and vibrant local communities. It might be possible for an Internet to do so while providing the wide latitude for innovative tinkering that Bennett wants, but I doubt it. Indeed, ditching the pretense of neutrality would enable the broader recognition of the partisan divisions about what the Internet should do, the acknowledgement that the Internet is and will always be a political technology. Whose interests do you want it to serve?

5/15/2014

What Was Step Two Again? Underpants Gnomes and the Technocratic Theory of Progress

Repost from TechnoScience as if People Mattered

Far too rarely do most people reflect critically on the relationship between advancing technoscience and progress. The connection seems obvious, if not “natural.” How else would progress occur except by “moving forward” with continuous innovation? 
Many, if not most, members of contemporary technological civilization seem to possess an almost unshakable faith in the power of innovation to produce an unequivocally better world. Part of the purpose of Science and Technology Studies (STS) scholarship is precisely to examine if, when, how and for whom improved science and technology means progress. Failing to ask these questions, one risks thinking about technoscience the way underpants gnomes think about underwear. Wait. Underpants gnomes? Let me back up for a second. The underpants gnomes are characters from the second season of the television show South Park. They sneak into people’s bedrooms at night to steal underpants, even the ones that their unsuspecting victims are wearing. When asked why they collect underwear, the gnomes explain their “business plan” as follows: Step 1) Collect underpants, Step 2) “?”, Step 3) Profit! The joke hinges on the sheer absurdity of the gnomes’ single-minded pursuit of underpants in the face of their apparent lack of a clear idea of what profit means and how underpants will help them achieve it. Although this reference is by now a bit dated, these little hoarders of other people’s undergarments are actually one of the best pop-culture illustrations of the technocratic theory of progress that often undergirds people’s thinking about innovation. The historian of technology Leo Marx described the technocratic idea of progress as: "A belief in the sufficiency of scientific and technological innovation as the basis for general progress. It says that if we can ensure the advance of science-based technologies, the rest will take care of itself. (The “rest” refers to nothing less than a corresponding degree of improvement in the social, political, and cultural conditions of life.)" The technocratic understanding of progress amounts to the application of underpants gnome logic to technoscience: Step 1) Produce innovations, Step 2) “?”, Step 3) Progress! 
This conception of progress is characterized by a lack of a clear idea of not only what progress means but also how amassing new innovations will bring it about.

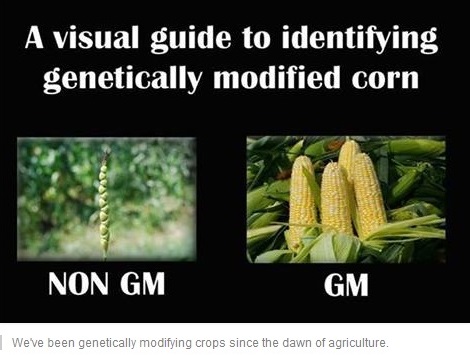

The point of undermining this notion of progress is not to say that improved technoscience does not or could not play an important role in bringing about progress but to recognize that there is generally no logical reason for believing it will automatically and autonomously do so. That is, “Step 2” matters a great deal. For instance, consider the 19th century belief that electrification would bring about a radical democratization of America through the emancipation of craftsmen, a claim that most people today will recognize as patently absurd. Given the growing evidence that American politics functions more like an oligarchy than a democracy, it would seem that wave after wave of supposedly “democratizing” technologies – from television to the Internet – have not been all that effective in fomenting that kind of progress. Moreover, while it is of course true that innovations like the polio vaccine, for example, certainly have meant social progress in the form of fewer people suffering from debilitating illnesses, one should not forget that such progress has been achievable only with the right political structures and decisions. The inventor of the vaccine, Jonas Salk, notably did not attempt to patent it, and the ongoing effort to eradicate polio has entailed dedicated global-level organization, collaboration and financial support. Hence, a non-technocratic civilization would not simply strive to multiply innovations under the belief that some vague good may eventually come out of it. Rather, its members would be concerned with whether or not specific forms of social, cultural or political progress will in fact result from any particular innovation. Ensuring that innovations lead to progress requires participants to think politically and social scientifically, not just technically. 
More importantly, it would demand that citizens consider placing limits on the production of technoscience that amounts to what Thoreau derided as “improved means to unimproved ends.” Proceeding more critically and less like the underpants gnomes means asking difficult and disquieting questions of technoscience. For example, pursuing driverless cars may lead to incremental gains in safety and potentially free people from the drudgery of driving, but what about the people automated out of a job? Does a driverless car mean progress to them? Furthermore, how sure should one be of the presumption that driverless cars (as opposed to less automobility in general) will bring about a more desirable world? Similarly, how should one balance the purported gains in yield promised by advocates of contemporary GMO crops against the prospects for a greater centralization of power within agriculture? How much does corn production need to increase to be worth the greater inequalities, much less the environmental risks? Moreover, does a new version of the iPhone every six months mean progress to anyone other than Apple’s shareholders and elite consumers? It is fine, of course, to be excited about new discoveries and inventions that overcome previously tenacious technical problems. However, it is necessary to take stock of where such innovations seem to lead. Do they really mean progress? More importantly, whose interests do they progress and how? Given the collective failure to demand answers to these sorts of questions, one has good reason to wonder whether technological civilization really is making progress. Contrary to the vision of humanity being carried up to the heavens of progress upon the growing peaks of Mt. Innovation, it might be that many of us are more like underpants gnomes dreaming of untold and enigmatic profits amongst piles of what are little better than used undergarments. 
One never knows unless one stops collecting long enough to ask, “What was step two again?”

5/5/2014

Don't Drink the GMO Kool-Aid: Continuity Arguments and Controversial TechnoScience

Repost from TechnoScience as if People Mattered

Far too much popular media and opinion is directed toward getting people to “Drink the Kool-Aid” and uncritically embrace controversial science and technology. One tool from the Science and Technology Studies (STS) toolbox that can help prevent you from too quickly taking a swig is the ability to recognize and take apart continuity arguments. Such arguments take the form: Contemporary practice X shares some similarities with past practice Y. Because Y is considered harmless, it is implied that X must also be harmless. Consider the following meme about genetically modified organisms (GMOs) from the "I Fucking Love Science" Facebook feed: This meme is used to argue that since selective breeding leads to the modification of genes, it is not significantly distinct from altering genetic code via any other method. The continuity of “modifying genetic code” from selective breeding to contemporary recombinant DNA techniques is taken to mean that the latter is no more problematic than the former. This is akin to arguing that exercise and proper diet are no different from liposuction and human-growth-hormone injections: They are all just techniques for decreasing fat and increasing muscle, right?

Continuity arguments, however, are usually misleading and mobilized for thinly veiled political reasons. As certain technology ethicists argue, “[they are] often an immunization strategy, with which people want to shield themselves from criticism and to prevent an extensive debate on the pros and cons of technological innovations.” Therefore, it is unsurprising to see continuity arguments abound within disagreements concerning controversial avenues of scientific research or technological innovation, such as GMO crops. Fortunately for you, dear reader, they are not hard to recognize and tear apart. Here are the three main flaws in reasoning that characterize most continuity arguments. To begin, continuity arguments attempt to deflect attention away from the important technical differences that do exist. Selective breeding, for example, differs substantively from more recent genetic modification techniques. The former mimics already existing evolutionary processes: The breeder artificially creates an environmental niche for certain valued traits by ensuring the survival and reproduction of only the organisms with those traits. Species, for the most part, can only be crossed if they are close enough genetically to produce viable offspring. One need not be an expert in genetic biology to recognize how the insertion of genetic material from very different species into another via retroviruses or other techniques introduces novel possibilities, and hence new uncertainties and risks, into the process. Second, the argument presumes that the past technoscientific practices being portrayed as continuous with novel ones are themselves unproblematic. Although selective breeding has been pretty much ubiquitous in human history, its application has not been without harm. For instance, those who oppose animal cruelty often take exception to the creation, maintenance and celebration of “pure” breeds. 
Not only does the cultural production of high status, expensive pedigrees provide a financial incentive for “puppy mills,” but it also discourages the adoption of mutts from shelters. Moreover, decades or centuries of inbreeding have produced animals that needlessly suffer from multiple genetic diseases and deformities, like boxers that suffer from epilepsy. Additionally, evidence is emerging that historical practices of selective breeding of food crops have rendered many of them much less nutritious. This, of course, is not news to “foodies” who have been eschewing iceberg lettuce and sweet onions for arugula and scallions for years. Regardless, one need not look far to see that even relatively uncontroversial technoscience has its problems. Finally and most importantly, continuity arguments assume away the environmental, social, and political consequences of large-scale sociotechnical change. At stake in the battle over GMOs, for instance, are not only the potential unforeseen harms via ingestion but also the probable cascading effects throughout technological civilization. There are worries that the overliberal use of pesticides, partially spurred by the development of crops genetically modified to be tolerant of them, is leading to “superweeds” in the same way that the overuse of antibiotics led to drug-resistant “superbugs.” GMO seeds, moreover, typically differ from traditional ones in that they are designed to “terminate” or die after a period of time. When that fails, Monsanto sues farmers who keep seeds from one season to the next. This ensures that Monsanto and other firms become the obligatory point of passage for doing agriculture, keeping farmers bound to them like sharecroppers to their landowner. On top of that, GMOs, as currently deployed, are typically but one piece of a larger system of industrialized monoculture and factory farms. 
Therefore, the battle is not merely about the putative safety of GMO crops but over what kind of food and farm culture should exist: one based on centralized corporate power, lots of synthetic pesticides, and high levels of fossil fuel use along with low levels of biodiversity, or the opposite? GMO continuity arguments deny the existence of such concerns. So the next time you hear or read someone claim that some new technological innovation or area of science is the same as something from the past (such as Google Glasses not being substantially different from a smartphone, or genetically engineering crops to be Roundup-ready not being significantly unlike selectively breeding for sweet corn), stop and think before you imbibe. They may be passing you a cup of Googleberry Blast or Monsanto Mountain Punch. Fortunately, you will be able to recognize the cyanide-laced continuity argument at the bottom and dump it out. Your existence as a critical thinking member of technological civilization will depend on it.

Repost from TechnoScience as if People Mattered

Despite all the potential risks of driverless cars and the uncertainty of actually realizing their benefits, totally absent from most discussions of this technology is the possibility of rejecting it. On the Atlantic Cities blog, for instance, Robin Chase recently wondered aloud whether a future with self-driving cars will be either heaven or hell. Although it is certainly refreshing that she eschews the techno-idealism and hype that too often pervade writing about technology, she nonetheless never pauses to consider if they really must be “the future.” Other writing on the subject is much less nuanced than even what Chase offers. A writer on the Freakonomics blog breathlessly describes driverless technology as a “miracle innovation” and a “pending revolution.” The implication is clear: Driverless cars are destined to arrive at your doorstep. Why is it that otherwise intelligent people seem to act as if autopiloted automobiles were themselves in the driver’s seat, doing much of the steering of technological development for humanity? The tendency to approach the development of driverless cars fatalistically reflects the mistaken belief that technology mostly evolves according to its own internal logic: i.e., that technology writ large progresses autonomously. With this understanding of technology, humanity’s role, at best, is simply to adapt as best it can and address the unanticipated consequences but not attempt to consciously steer or limit technological innovation. The premise of autonomous technology, however, is undermined by the simple social scientific observation of how technologies actually get made. Which technologies become widespread is as much a sociopolitical matter as a technical one. 
The dominance of driving in the United States, for instance, has more to do with the stifling municipal regulation on and crushing debts held by early 20th century transit companies, the Great Depression, the National Highway Act and the schemes of large corporations like GM and Standard Oil to eliminate streetcars than the purported technical desirability of the automobile. Indeed, driverless cars can only become “the future” if regulations allow them on city streets and state highways. Citizens could collectively choose to forgo them. The cars themselves will not lobby legislatures to allow them on the road; only the companies standing to profit from them will. That such simple observations are missed by most people is a reflection of the entrenchment of the idea of autonomous technology in their thinking. Certain technologies only seem fated to dominate technological civilization because most people are relegated to the back seat on the road to “progress,” rarely being allowed to have much say concerning where they are going and how to get there. Whether or not citizens’ lives are upended or otherwise negatively affected by any technological innovation is treated as mainly a matter for engineers, businesses and bureaucrats to decide. The rest of us are just along for the ride. A people-driven, rather than technology- or elite-driven, approach to driverless cars would entail implementing something like what Edward Woodhouse has called the “Intelligent Trial and Error” steering of technology. An intelligent trial and error approach recognizes that, given the complexity and uncertainty surrounding any innovation, promises are often overstated and significant harms overlooked. No one really knows for sure what the future holds. For instance, automating driving might fail to deliver on promised decreases in vehicles on the road and miles driven if it contributes to accelerating sprawl and its lower costs lead to more frequent and frivolous trips and deliveries. 
The first step to the intelligent steering of driverless car technology would be to involve those who might be negatively affected. Thus far, most of the decision making power lies with less-than-representative political elites and large tech firms, the latter obviously standing to benefit a great deal if and when they get the go ahead. There are several segments of the population likely to be left in the ditch in the process of delivering others to "the future." Drastically lowering the price of automobile travel will undermine the efforts of those who desire to live in more walkable and dense neighborhoods. Automating driving will likely cause the massive unemployment of truck and cab drivers. Current approaches to (poorly) governing technological development are poised to render these groups victims of “progress” rather than participants in it. Including them could open up previously unimagined possibilities, like moving forward with driverless cars only if financial and regulatory support could be suitably guaranteed for redensifying urban areas and the retraining, social welfare and eventual placement in livable wage jobs for the workers made obsolete. Taking the sensible initial precaution of gradually scaling-up developments is another component of intelligent trial and error. For self-driving cars, this would mostly entail more extensive testing than is currently being pursued. The experiences of a few dozen test vehicles in Nevada or California hardly provide any inkling of the potential long-term consequences. Actually having adequate knowledge before proceeding with autonomous automobiles would likely require a larger-scale implementation of them within a limited region for a period of five years if not more than a decade. During this period, this area would need to be monitored by a wide range of appropriate experts, not just tech firms with obvious conflicts of interest. Do these cars promote hypersuburbanization? 
Are they actually safer, or do aggregations of thousands of programmed cars produce emergent crashes similar to those created by high-frequency trading algorithms? Are vehicle miles really substantially affected? Are citizens any happier or noticeably better off, or do driverless commutes just amount to more unpaid telework hours and more time spent improving one’s Candy Crush score? Doing this kind of testing for autopiloted automobiles would simply extend the model of the FDA, which Americans already trust to ensure that their drugs cure rather than kill them, to technologies with the potential for equally tragic consequences. If and only if driverless cars were to pass these initial hurdles, a sane technological civilization would then implement them in ways that were flexible and fairly easy to reverse. Mainly this would entail not repeating the early 20th century American mistake of dismantling mass transit alternatives or prohibiting walking and biking through autocentric design. The recent spikes in unconventional fossil fuel production aside, resource depletion and climate change are likely to eventually render autopiloted automobiles an irrational mode of transportation. They depend on the ability to shoot expensive communication satellites into space and maintain a stable electrical grid, both things that growing energy scarcity would make more difficult. If such a future came to pass, self-driving cars would end up being the 21st century equivalent of the abandoned roadside statues of Easter Island: a testament to the follies of unsustainable notions of progress. Any intelligent implementation of driverless cars would not leave future citizens with the task of having to wholly dismantle or desert cities built around the assumption of forever having automobiles, much less self-driving ones. There are, of course, many more details to work out. 
Regardless, despite any inconveniences that an intelligent trial and error process would entail, it would beat what currently passes for technology assessment: talking heads attempting to predict all the possible promises and perils of a technology while it is increasingly developed and deployed with only the most minimal of critical oversight. There is no reason to believe that the future of technological civilization was irrevocably determined once Google engineers started placing self-driving automobiles on Nevada roads. Doing otherwise would merely require putting the broader public back into the driver’s seat in steering technological development.

On my last day in San Diego, I saw a young woman get hit by the trolley. The gasps of other people waiting on the platform prompted me to look up just as she was struck and then dragged for several feet. Did the driver come in too fast? Did he not use his horn? Had she been distracted by her phone? I do not know for certain, though her cracked smartphone was lying next to her motionless body. Good Samaritans, more courageous and likely more competent in first aid than myself, rushed to help her before I got over my shock and dropped my luggage. For weeks afterwards, I kept checking news outlets only to find nothing. Did she live? I still do not know. What I did discover is that people are struck by the trolley fairly frequently, possibly more often than one might expect. Many, like the incident I witnessed, go unreported in the media. Why would an ostensibly sane technological civilization tolerate such a slowly unfolding and piecemeal disaster? What could be done about it?

I do not know of any area of science and technology studies that focuses on the kinds of everyday accidents killing or maiming tens or hundreds of thousands of people every year, even though examples are easy to think of: everything from highway fatalities to firearm accidents. The disasters typically focused on are spectacular events, such as Three Mile Island, Bhopal or the Challenger explosion, where many people die and/or millions bear witness. Charles Perrow, for instance, refers to the nuclear meltdown at Three Mile Island as a “normal accident”: an unpredictable but unavoidable consequence of highly complex and tightly coupled technological systems. Though seemingly unrelated, the tragedy I witnessed was perhaps not so different from a Three Mile Island or a Challenger explosion. The light rail trolley in San Diego is clearly a very complex sociotechnical system relying on electrical grids, signaling systems at grade crossings as well as the social conditioning of behaviors meant to keep riders out of danger. Each passenger could likewise be viewed as a component of a large sociotechnical network, of which their life is but one part. The young woman I saw, if distracted by her phone, may have been a casualty of a global telecommunications network, dominated by companies interested in keeping customers engrossed in their gadgets, colliding with that of the trolley car. Any particular accident causing the injury or death of a pedestrian might be unpredictable, but the design of these systems, now coupled and working at cross purposes, would seem to render accidents in general increasingly inevitable. A common tendency when confronted with an accident, however, is for people to place all the blame on individuals and not on systems. Indeed, after the event I witnessed, many observers made their theories clear. Some blamed the driver for coming in too fast. Others claimed the injured young woman was looking at her phone and not her surroundings. 
A man on the next train I boarded even muttered, “She probably jumped” under his breath. I doubt this line of reasoning is helpful for improving contemporary life, as useful as it might be for witnesses to quickly make sense of tragedy or those most culpable to assuage their guilt. At the end of the day, a young woman either is no longer living or must face a very different life than she envisioned for herself; friends, family and maybe a partner must endure personal heartbreak; and a trolley driver will struggle to live with the memory of the incident. Victim blaming likely exacerbates the degree to which the status quo and potentially helpful sociotechnical changes are left unexamined. Indeed, Ford actively used the strategy of blaming individual drivers to distract attention away from the fact that the design of the gas tank in the Pinto was inept and dangerous. The platform where the accident occurred had no advance warning system for arriving trains. It was an elevated platform, which eliminated the need for grade crossings but also had the unintended consequence of depriving riders of the benefit of their flashing red lights and bells. Unlike metros, the trolley trains operate near the grade level of the platform. Riders are often forced to cross the tracks to either exit the platform or switch lines. The trolleys are powered by electricity and are eerily silent, except for a weak horn or bell that is easy to miss if one is not listening for it (and it may often come too late anyway). At the same time, riders are increasingly likely to have headphones on or have highly alluring and distracting devices in their hands or pants pockets. Technologically encouraged “inattention blindness” has been receiving quite a lot of attention as increasingly functional mobile devices flood the market. Apart from concerns about texting while driving and other newly emerging habits, there are worries that such devices have driven the rise in pedestrian and child accidents. 
British children on average receive their first cell phone at eleven years old, paralleling a three-fold increase in their likelihood of dying or being severely injured on the way to school. Although declining for much of a decade, childhood accident rates have risen in the US over the last few years. Some suggest that smartphones have fueled an increase in accidents stemming from “distracted parenting.” Of course, inattention blindness is not solely a creation of the digital age; one thinks of stories told about Pierre Curie dying after inattentively crossing the street and getting run over by a horse-drawn carriage. Yet, it would definitely be an act of intentional ignorance to not note the particular allures of digital gadgetry. What if designers of trolley stations were to presume that riders would likely be distracted, with music blaring in their ears, engrossed in a digital device or simply daydreaming? It seems like a sensible and simple precaution to include lights and audio warnings. The Edmonton LRT, for instance, alerts riders of incoming trains. Physically altering the platform architecture, however, seems prohibitively expensive in the short term. A pedestrian bridge installed in Britain after a teenage girl was struck cost about two million pounds. A more radical intervention might be altering cell phone systems or Wi-Fi networks so that devices are frozen with a warning message when a train is arriving or departing, allowing, of course, for unimpeded phone calls to 911. Yet, the feasibility or existence of potentially helpful technological fixes does not mean they will be implemented. Trolley systems and municipalities may need to be induced or incentivized to include them. Given the relative frequency of incidents in San Diego, for instance, it seems that the mere existence of a handful or more injuries or deaths per year is insufficient by itself. 
I would not want to presume that the San Diego Metropolitan Transit Service is acting like Ford in the Pinto case: intentionally not fixing a dangerous technology because remedying the problem is more expensive than paying settlements with victims. Perhaps it is simply a case of “normalized deviance,” in which an otherwise unusual event is eventually accepted as a natural or normal component of reality. Nevertheless, continuous non-decision has the same consequences as intentional neglect. It is not hard to envision policy changes that might lessen the likelihood of similar events in the future. Audible warning devices could be mandated. Federal regulations are too vague on this matter, leaving too much to the discretion of the operator and transit authority. Light rail systems could be evaluated at a regional or national level according to their safety and then face fines or subsidy cuts if accident frequency remains above a certain level. Technologies that could enhance safety could be subsidized to a level that makes implementation a no-brainer for municipalities. Enabling the broadcast to or freezing of certain digital devices on train platforms would clearly require technological changes in addition to political ones. Currently Wi-Fi and cell signals are not treated as public to the same degree as broadcast TV and radio. Broadcasts on the latter two are frequently interrupted in the case of emergencies, but the former are not. Given the declining share of the average American’s media diet that television and radio compose, it seems reasonable to seek to extend the reach and logic of the Emergency Alert System to other media technologies and for other public purposes. 
Much like the unthinking acceptance of the tens of thousands of lives lost each year on American roads and highways, it would be too easy to view accidents like the one I saw as simply a statistical certainty or, even worse, the “price of modernity.” Every accident is a tragedy, a mini-disaster in the life of a person and those connected with them. It is easy to imagine simple design changes and technological interventions that could have reduced the likelihood of such events. They are not expensive, nor do they require significant advances in technoscientific know-how. A sane technological civilization would not neglect such simple ways of lessening needless human suffering.

A recent interview in The Atlantic with a man who believes himself to be in a relationship with two “Real Dolls” illustrates what I think is one of the most significant challenges raised by certain technological innovations as well as the importance of holding on to the idea of authenticity in the face of post-modern skepticism. Much like Evan Selinger’s prescient warnings about the cult of efficiency and the tendency for people to recast traditional forms of civility as onerous inconveniences in response to affordances of new communication technologies, I want to argue that the burdens of human relationships, of which “virtual other” technologies promise to free their users, are actually what make them valuable and authentic.[1]

The Atlantic article consists of an interview with “Davecat,” a man who owns and has “sex” with two Real Dolls – highly realistic-looking but inanimate silicone mannequins. Davecat argues that his choice to pursue relationships with “synthetic humans” is a legitimate one. He justifies his lifestyle preferences by contending that “a synthetic will never lie to you, cheat on you, criticize you, or be otherwise disagreeable.” The two objects of his affection are not mere inanimate objects to Davecat but people with backstories, albeit ones of his own invention. 
Davecat presents himself as someone fully content with his life: “At this stage in the game, I'd have to say that I'm about 99 percent fulfilled. Every time I return home, there are two gorgeous synthetic women waiting for me, who both act as creative muses, photo models, and romantic partners. They make my flat less empty, and I never have to worry about them becoming disagreeable.” In some ways, Davecat’s relationships with his dolls are incontrovertibly real. His emotions strike him as real, and he acts as if his partners were organic humans. Yet, in other ways, they are inauthentic simulations. His partners have no subjectivities of their own, only what springs from Davecat’s own imagination. They “do” only what he commands them to do. They are “real” people only insofar as they are real to Davecat’s mind and his alone. In other words, Davecat’s romantic life amounts to a technologically afforded form of solipsism. Many fans of post-modernist theory would likely scoff at the mere word, authenticity being as detestable as the word “natural” as well as part and parcel of philosophically and politically suspect dualisms. Indeed, authenticity is not something wholly found out in the world but a category developed by people. Yet, in the end, the result of post-modern deconstruction is not to get to truth but to support an alternative social construction, one ostensibly more desirable to the person doing the deconstructing.  As the philosopher Charles Taylor[2] has outlined, much of contemporary culture and post-modernist thought itself is dominated by an ideal of authenticity no less problematic. That ethic involves the moral imperative to “be true to oneself” and that self-realization and identity are both inwardly developed. Deviant and narcissistic forms of this ethic emerge when the dialogical character of human being is forgotten. 
It is presumed that the self can somehow be developed independently of others, as if humans were not socially shaped beings but wholly independent, self-authoring minds. Post-modernist thinkers slide toward this deviant ideal of authenticity, according to Taylor, in their heroization of the solitary artist and their tendency to equate being with the aesthetic. One need only look to post-modernist architecture to see the practical conclusions of such an ideal: buildings constructed without concern for the significance of the surrounding neighborhood into which they will be placed or as part of a continuing dialogue about architectural significance. The architect seeks only full license to erect a monument to their own ego. Non-narcissistic authenticity, as Taylor seems to suggest, is realizable only in self-realization vis-à-vis the intersubjective engagement with others. As such, Davecat’s sexual preferences for “synthetic humans” do not amount to a sexual orientation as legitimate as those of homosexuals or other queer peoples who have striven for recognition in recent decades. To equate the two is to do the latter a disservice. Both may face forms of social ridicule for their practices but that is where the similarities end. Members of homosexual relationships have their own subjectivities, which each must negotiate and respect to some extent if the relationship itself is to flourish. All just and loving relationships involve give-and-take, compromise and understanding and, sometimes, hardship and disappointment. Davecat’s relationship with his dolls is narcissistic because there is no possibility for such a dialogue and the coming to terms with his partners’ subjectivities. In the end, only his own self-referential preferences matter. Relationships with Real Dolls are better thought of as morally commodified versions of authentic relationships. 
Borgmann[3] defines a practice as morally commodified “when it is detached from its context of engagement with a time, a place, and a community” (p. 152). Although Davecat engages in a community of “iDollators,” his interactions with his dolls are detached from the context of engagement typical for human relationships. Much like how mealtimes are morally commodified when replaced by an individualized “refueling” at a fast-food joint or with a microwave dinner, Davecat’s dolls serve only to “refuel” his own psychic and sexual needs at the time, place and manner of his choosing. He does not engage with his dolls but consumes them. At the same time, “virtual other” technologies are highly alluring. They can serve as “techno-fixes” to those lacking the skills or dispositions needed for stable relationships or those without supportive social networks (e.g., the elderly). Would not labeling them as inauthentic demean the experiences of those who need them? Yet, as currently envisioned, Real Dolls and non-sexually-oriented virtual other technologies do not aim to render their users more capable of human relationships or help them become re-established in a community of others but provide an anodyne for their loneliness, an escape from or surrogate for the human social community of which they find themselves on the outside. Without a feasible pathway toward non-solipsistic relationships, the embrace of virtual other technologies for the lonely and relationally inept amounts to giving up on them, which suggests that it is better for them to remain in a state of arrested development. Another danger is well articulated by the psychologist Sherry Turkle.[4] Far from being mere therapeutic aids, such devices are used to hide from the risks of social intimacy and risk altering collective expectations for human relationships. 
That is, she worries that the standards of efficiency and egoistic control afforded by robots come to be the standard by which all relationships are judged. Indeed, her detailed clinical and observational data bear out just such a claim. Rather than being able to simply wave off the normalizing and advancement of Real Dolls and similar technologies as a “personal choice,” Turkle’s work forces one to recognize that cascading cultural consequences result from the technologies that societies permit to flourish. The amount of money spent on technological surrogates for social relationships is staggering. The various sex dolls on the market and the robots being bought for nursing homes cost several thousand dollars apiece. If that money could be incrementally redirected, through tax and subsidy, toward building the kinds of material, economic and political infrastructures that have supported community at other places and times, there would be much less need for such techno-fixes. Much like what Michele Willson[5] argues about digital technologies in general, they are technologies “sought to solve the problem of compartmentalization and disconnection that is partly a consequence of extended and abstracted relations brought about by the use of technology” (p. 80). The market for Real Dolls, therapy robots for the elderly and other forms of allaying loneliness (e.g., television) is so strong because alternatives have been undermined and dismantled. The demise of rich opportunities for public associational life and community-centering cafes and pubs has been well documented, hollowed out in part by suburban living and the rise of television.[6] The most important response to Real Dolls and other virtual other technologies is to actually pursue a public debate about what citizens would like their communities to look like, how they should function and which technologies are supportive of those ends. 
It would be the height of naiveté to presume the market or some invisible hand of technological innovation simply provides what people want. As research in Science and Technology Studies makes clear, technological innovation is not autonomous, but neither has it been intelligently steered. The pursuit of mostly fictitious and narcissistic relationships with dolls is of questionable desirability, individually and collectively; a civilization that deserves the label of civilized would not sit idly by as such technologies and their champions alter the cultural landscape by which it understands human relationships.

____________________________________

[1] I made many of these same points in an article I published last year in AI & Society, which will hopefully exit “OnlineFirst” limbo and appear in an issue at some point.

[2] Taylor, Charles. (1991). The Ethics of Authenticity. Cambridge, MA: Harvard University Press.

[3] Borgmann, Albert. (2006). Real American Ethics. Chicago, IL: University of Chicago Press.

[4] Turkle, Sherry. (2012). Alone Together. New York: Basic Books.

[5] Willson, Michele. (2006). Technically Together. New York: Peter Lang.

[6] See: Putnam, Robert D. (2000). Bowling Alone. New York: Simon & Schuster and Oldenburg, Ray. (1999). The Great Good Place. Cambridge, MA: Da Capo Press.

Evgeny Morozov’s disclosure that he physically locks up his wi-fi card in order to better concentrate on his work spurred an interesting comment-section exchange between him and Nicholas Carr. At the heart of their disagreement is a dispute concerning the malleability of technologies, how this plasticity ought to be recognized and dealt with in intelligent discourse about their effects and how the various social problems enabled, afforded or worsened by contemporary technologies could be mitigated. Neither mentions, however, the good life. 
Carr, though not ignorant of the contingency/plasticity of technology, tends to underplay malleability by defining a technology quite broadly and focusing mainly on its effects on his life and those of others. That is, he can talk about “the Net” doing X, such as contributing to increasingly shallow thinking and reading, because he is assuming and analyzing the Internet as it is presently constituted. Doing this heavy-handedly, of course, opens him up to charges of essentialism: assuming a technology has certain inherent and immutable characteristics. Morozov criticizes him accordingly: “Carr…refuses to abandon the notion of ‘the Net,’ with its predetermined goals and inherent features; instead of exploring the interplay between design, political economy, and information science…” Morozov’s critique reflects the theoretical outlook of a great deal of STS research, particularly the approaches of “social construction of technology” and “actor-network theory.” These scholars hope to avoid the pitfalls of technological determinism – the belief that technology drives history or develops according to its own, and not human, logic – by focusing on the social, economic and political interests and forces that shape the trajectory of a technological development as well as the interpretive flexibility of those technologies to different populations. A constructivist scholar would argue that the Internet could have been quite different than it is today and would emphasize the diversity of ways in which it is currently used.

Yet, I feel that people like Morozov often go too far and overstate the case for the flexibility of the web. While the Internet could be different and likely will be so in several years, in the short term its structure and dynamics are fairly fixed. Technologies have a certain momentum to them. This means that most of my friends will continue to “connect” through Facebook whether I like the website or not. Neither is it very likely that an Internet device that aids rather than hinders my deep reading practices will emerge any time soon. Taking this level of obduracy or fixedness into account in one’s analysis is neither essentialism nor determinism, although it can come close. All this talk of technology and malleability is important because a scholar’s view of the matter tends to color his or her capacity to imagine or pursue possible reforms to mitigate many of the undesirable consequences of contemporary technologies. Determinists or quasi-determinists can succumb to a kind of fatalism, whether it be in Heidegger’s lament that “only a god can save us” or Kevin Kelly’s almost religious faith in the idea that technology somehow “wants” to offer human beings more and more choice and thereby make them happy. There is an equal level of risk, however, in overemphasizing flexibility and taking a quasi-instrumentalist viewpoint. One might fall prey to technological “solutionism,” the excessive faith in the potential of technological innovation to fix social problems – including those caused by prior ill-conceived technological fixes. Many today, for instance, look to social networking technologies to ameliorate the relational fragmentation enabled by previous generations of network technologies: the highway system, suburban sprawl and the telephone. A similar risk is the over-estimation of the capacity of individuals to appropriate, hack or otherwise work around obdurate technological systems. 
Sure, working class Hispanics frequently turn old automobiles into “Low Riders” and French computer nerds hacked the Minitel system into an electronic singles’ bar, but it would be imprudent to generalize from these cases. Actively opposing the materialized intentions of designers requires expertise and resources that many users of any particular technology do not have. Too seldom do those who view technologies as highly malleable ask, “Who is actually empowered in the necessary ways to be able to appropriate this technology?” Generally, the average citizen is not. The difficulty of mitigating fairly obdurate features of Internet technologies is apparent in the incident that I mentioned at the beginning of this post: Morozov regularly locks up his Internet cable and wi-fi card in a timed safe. He even goes so far as to include the screwdrivers that he might use to thwart the timer and access the Internet prematurely. Unsurprisingly, Carr took a lot of satisfaction in this admission. It would appear that some of the characteristics of the Internet, for Morozov, remain quite inflexible to his wishes, since he often requires a fairly involved system and coterie of other technologies in order to allay his own in-the-moment decision-making failures in using it. Of course, Morozov is not what Nathan Jurgenson insultingly and dismissively calls a “refusenik,” someone refusing to utilize the Internet based on ostensibly problematic assumptions about addiction, or certain ascetic and aesthetic attachments. However, the degree to which he must delegate to additional technologies in order to cope with and mitigate the alluring pull of endless Internet-enabled novelty on his life is telling. Morozov, in fact, copes with the shaping power of Internet technologies on his moral choices as philosopher of technology Peter-Paul Verbeek would recommend. 
Rather than attempting to completely eliminate an onerous technology from his life, Morozov has developed a tactic that helps him guide his relationship with that technology and its effects on his practices in a more desirable direction. He strives to maximize the “goods” and minimize the “bads.” Because it otherwise persuades or seduces him into distraction, feeding his addiction to novelty, Morozov locks up his wi-fi card so he can better pursue the good life. Yet, these kinds of tactics seem somewhat unsatisfying to me. It is depressing that so much individual effort must be expended in order to mitigate the undesirable behaviors too easily afforded or encouraged by many contemporary technologies. Evan Selinger, for instance, has noted how the dominance of electronically mediated communication increasingly leads to a mindset in which everyday pleasantries, niceties and salutations come to be viewed as annoyingly inconvenient. Such a view, of course, fails to recognize the social value of those seemingly inefficient and superfluous “thank you’s” and “warmest regards’.” Regardless, Selinger is forced to do a great deal more parental labor to disabuse his daughter of such a view once her new iPod affords an alluring and more personally “efficient” alternative to hand-writing her thank-you notes. Raising non-narcissistic children is hard enough without Apple products tipping the scale in the other direction. Internet technologies, of course, could be different and less encouraging of such sociopathological approaches to etiquette or other forms of self-centered behavior, but they are unlikely to be so in the short-term. Therefore, cultivating opposing behaviors or practicing some level of avoidance are not the responses of a naïve and fearful Luddite or “refusenik” but of someone mindful of the kind of life they want (or want their children) to live pursuing what is often the only feasible option available. 
Those pursuing such reactive tactics, of course, may lack a refined philosophical understanding of why they do what they do, but their worries should not be dismissed as naïve or illogically fearful simply because they struggle to articulate a sophisticated reasoning. Too little attention and too few resources are focused on ways to mitigate declines in civility or other technological consequences that ordinary citizens worry about and the works of Carr and Sherry Turkle so cogently expose. Too often, the focus is on never-ending theoretical debates about how to “properly” talk about technology or forever describing all the relevant discursive spaces. More systematically studying the possibilities for reform seems more fruitful than accusations that so-and-so is a “digital dualist,” a charge that I think has more to do with the accused viewing networked technologies unfavorably than their work actually being dualistic. Theoretical distinctions, of course, are important. Yet, at some point neither scholarship nor the public benefits from the linguistic fisticuffs; it is clearly more a matter of egos and the battle over who gets to draw the relevant semantic frontier, outside of which any argument or observation can be considered insufficiently “nuanced” to be worthy of serious attention. Regardless, barring the broader embrace of systems of technology assessment and other substantive means of formally or informally regulating technologies, some concerned citizens respond to the tendency of many contemporary technologies to fragment their lives or distract them from the things they value by refusing to upgrade their phones or unplugging their TVs. Only the truly exceptional, of course, lock them in safes. 
Yet, the avoidance of technologies that encourage unhealthy or undesirable behaviors is not the sign of some cognitive failing; for many people, it beats acquiescence, and technological civilization currently provides little support for doing anything in between.

Many people in well-off, developed nations are afflicted with an acute myopia when it comes to their understanding of technoscience. Everyone knows, of course, that contemporary technoscientists continually produce discoveries and devices that lessen drudgery, limit suffering, and provide comfort and convenience to human lives. However, there is a pervasive failure to see science and technology as not merely contributing solutions to modern social problems but also being one of their most significant causes. Sal Restivo[1], channeling C. Wright Mills, utilizes the metaphor of the science machine. That most people tend to only see the internal mechanisms of this machine leaves them unaware of the fact that the ends to which many contemporary science machines are being directed are anything but objective and value neutral. Contemporary science too easily contributes to the making of social problems because too many people mistakenly believe it to be autonomous and self-correcting, abdicating their own share of responsibility and allowing others to direct it for them. Most importantly, science machines are too often steered mainly towards developing profitable treatments of symptoms, and frequently symptoms brought on in part by contemporary technoscience itself, rather than addressing underlying causes. The world of science is often popularly described as a marketplace for ideas. This economic metaphor conjures up an image of science seemingly guided and legitimated by some invisible hand of objectivity. Like markets, it is commonly assumed that science as an institution simply aggregates the activities of individual scientists to provide for an objectively “better” world. 
Unlike market actors, however, scientists are assumed to be disinterested and not motivated by anything other than the desire to pursue unadulterated truth. Nonetheless, in the same way that any respectable scientist would aim to falsify an overly optimistic or unrealistic model of physical phenomena, it behooves social scientists to question such a rosy portrayal of scientific practice. Indeed, this has been the focus of the field of science and technology studies for decades.

Like any human institution, science is rife with inequities of power and influence, and there are many socially dependent reasons why some avenues of research flourish while others flounder. For instance, why does nanoscience garner so much research attention but “green” chemistry so little? The answer is likely not that funding providers have been thoroughly and unequivocally convinced by the weight of the available evidence; many of the over-hyped promises of nanoscience are not yet anywhere close to being fulfilled. Edward Woodhouse[2] points to a number of reasons. Pertinent to my argument is his observation of the degree of interdependence, the double binds, tying the chemistry discipline to industry and government to business. Clearly, there are significant barriers to shifting to a novel paradigm for defining “good” chemistry when the “needs” of the current industry shape the curriculum and the narrowness of the pedagogy inhibits the development of a more innovative chemical industry. All the while, business can shape the government’s opinion of which research will be the most profitable and productive, and the most productive research also generally happens to be whatever has the most government backing. Put simply, the trajectory of scientific research is often not directed by scientific motivations or concerns; rather, it is generally biased towards maintaining the momentum of the status quo and the interests of industry. The influence of business shapes research paradigms; focus is placed primarily on developments that can be easily marketed to satisfy private wants rather than public needs, an observation expanded upon by Woodhouse and Sarewitz[3]. Nanoscientists can promise new drug treatments and individual enhancements that will surely be expensive, although also likely beneficial, for those who can afford them. Yet, it seems that many nanomaterials will likely have toxic and/or carcinogenic effects themselves when released into the environment[4]. 
A world full of more benign, “green” chemicals, on the other hand, would seem to negate much of the need for some of those treatments, though only by threatening the bottom line of a pharmaceutical industry already adapted to the paradigm of symptom treatment. This illustrates the cruel joke too often played by some areas of contemporary science on the public at large. Technoscientists are busy at work to develop privately profitable treatments for the public health problems caused in part by the chemicals already developed and deployed by contemporary technoscience. It is a supply that succeeds in creating its own demand, and quite a lucrative process at that. Treating underlying causes rather than symptoms is a public good that often comes at private cost, while the current research support structure too frequently converts public tax dollars into private gain. It is not only in the competing paradigms of green chemistry and nanochemistry that this issue arises. Biotechnologists are genetically engineering crops to be more pest and disease resistant by tolerating or producing pesticides themselves, solving problems mostly created by moving to industrial monoculture in the first place. Yet, research into organic farming methods is poorly funded, and there are concerns that such genetic modifications and pesticide use are leading to a decline in the population of pollinating insects that are necessary for agriculture[5]. What might be the next step if biotech/agricultural research continues this dysfunctional trajectory? Genetically engineering pollinating insects to tolerate pesticides or engineering plants to not need pollinating insects at all? What unintended ecological consequences might those developments bring? 
The process seems to lead further and further to a point at which activities that could be relatively innocuous and straightforward, like maintaining one’s health or growing crops, are increasingly difficult without an ever-expanding slew of expensive, invasive, and damaging chemicals and technologies. Goods that were once easily obtainable and cheap, though imperfect, have been transformed into specialized goods available to an ever more select few. However, the breakdown of natural processes into individual components that can each be provided by some new, specialized device or manufactured chemical obviously adds to standard economic measures of growth and progress; more holistic approaches, in comparison, are systematically devalued by such measures. I could go on to note other examples, such as how network technologies and psychiatric medicine are used to cope with the contemporary forms of isolation and alienation brought on by practices of sociality increasingly modeled after communication and transportation networks, but the underlying mechanism is the same. If modern technoscience were to be likened to a machine, it would appear to be a treadmill. As noted by Woodhouse[6], once technoscientists develop some new capacity it often becomes collectively unthinkable to forgo it. As a result, the technoscience machine keeps increasing in speed, and members of technological civilization increasingly struggle to keep up. There are continually new band-aids and techno-fixes being introduced to treat the symptoms caused by previous generations of innovations, band-aids, and techno-fixes. Too little thought, energy, and research funding gets devoted to inquiring into how the dynamics of the science machine could be different: directed towards lessening the likelihood and damage of unintended consequences, removing or replacing irredeemable areas of technoscience, or addressing causes rather than merely treating symptoms.

References

[1] Restivo, S. (1988). Modern science as a social problem. Social Problems, 35 (3), 206-225.
[2] Woodhouse, E. (2005). Nanoscience, green chemistry, and the privileged position of science. In S. Frickel, & K. Moore (Eds.), The new political sociology of science: Institutions, networks, and power (pp. 148-181). Madison, WI: The University of Wisconsin Press.
[3] Woodhouse, E., and Sarewitz, D. (2007). Science policies for reducing societal inequities. Science and Public Policy, 34 (3), 139-150.
[4] Becker, H., Herzberg, F., Schulte, A., Kolossa-Gehring, M. (2010). The carcinogenic potential of nanomaterials, their release from products and options for regulating them. International Journal for Hygiene and Environmental Health, 214 (3), 231-238.
[5] Suryanarayanan, S., Kleinman, D.L. (2011). Disappearing bees and reluctant regulators. Perspectives in Science and Technology Online, Summer. Retrieved from http://www.issues.org/27.4/p_suryanarayanan.html
[6] Woodhouse, E. (2005). Nanoscience, green chemistry, and the privileged position of science. In S. Frickel, & K. Moore (Eds.), The new political sociology of science: Institutions, networks, and power (pp. 148-181). Madison, WI: The University of Wisconsin Press.

Author: Taylor C. Dotson is an associate professor at New Mexico Tech, a Science and Technology Studies scholar, and a research consultant with WHOA. He is the author of The Divide: How Fanatical Certitude is Destroying Democracy and Technically Together: Reconstructing Community in a Networked World. Here he posts his thoughts on issues mostly tangential to his current research.
